Benchmarking Microbial Network Inference Algorithms: A 2025 Guide for Robust and Reproducible Analysis

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on the current state of benchmarking microbial network inference algorithms. It covers the foundational principles of microbial co-occurrence networks and their importance in understanding health and disease. The piece explores the diverse methodological landscape, from correlation-based to conditional dependence-based approaches, and introduces robust validation frameworks like cross-validation and benchmark suites. It addresses critical troubleshooting challenges such as data sparsity, compositionality, and environmental confounders. Finally, it offers a comparative analysis of algorithm performance, consensus methods, and practical recommendations for selecting and applying these tools to generate biologically meaningful insights in biomedical research.

The What and Why: Understanding Microbial Networks and the Critical Need for Benchmarking

Microbial co-occurrence networks have emerged as a powerful computational framework for unraveling the complex ecological relationships within microbial communities across diverse environments, from anaerobic digestion systems to the human gut. These networks represent microbial taxa as nodes and their statistically inferred associations as edges, creating a visual and mathematical representation of potential ecological interactions [1] [2]. Constructing these networks typically involves identifying the taxonomic units in the data, quantifying how often they co-occur across samples, and analyzing the resulting network to identify central taxa and clusters of associated taxa [2]. This approach has become increasingly vital in microbiome research as it moves beyond simple compositional analysis to reveal the intricate interplay between community members that underpins ecosystem functioning and stability [3].

The fundamental unit of these networks consists of nodes (representing microbial taxa, genes, or metabolites) and edges (representing statistically significant relationships between them) [1] [3]. These edges can be classified as either positive or negative, potentially indicating various ecological relationships such as mutualism, commensalism, competition, or predation [1]. Depending on the analytical approach, networks can be "weighted" to show relationship strength, "signed" to display both positive and negative associations, or "directed" to indicate interaction directionality, though most microbial networks are undirected due to the difficulty in establishing causal relationships from sequencing data alone [3].

Table 1: Fundamental Components of Microbial Co-occurrence Networks

| Component | Description | Ecological Interpretation |
|---|---|---|
| Nodes | Represent microbial taxa, genes, metabolites, or other compositional properties | Individual microbial entities or functional units within the community |
| Edges | Statistically significant relationships between nodes inferred from abundance patterns | Potential ecological interactions (competition, cooperation, cross-feeding) |
| Positive Edges | Significant co-occurrence or co-abundance between nodes | Potential mutualism, commensalism, or shared niche preference |
| Negative Edges | Significant mutual exclusion or anti-correlation between nodes | Potential competition, antagonism, or distinct environmental preferences |
| Node Degree | Number of connections a node has to other nodes | Indicator of a taxon's connectivity within the community |
| Betweenness Centrality | Number of shortest paths passing through a node | Measure of a node's role as a connector between different network modules |

Network Construction Methodologies

Data Preparation and Preprocessing

The construction of robust microbial co-occurrence networks requires careful data preparation to avoid technical artifacts and spurious associations. The initial step involves taxonomic agglomeration, where microbial sequences are clustered into operational taxonomic units (OTUs) at 97% sequence similarity or as amplicon sequence variants (ASVs) based on single-nucleotide differences [1]. This decision fundamentally affects network interpretation, as higher taxonomic grouping (e.g., genus or class level) reduces dataset complexity but may obscure species-level interactions [1] [4]. Subsequent data filtering addresses the challenge of zero-inflated microbiome data by applying prevalence thresholds (typically 10-60% across samples) to remove rare taxa that could introduce spurious correlations [1]. This represents a critical trade-off between inclusivity and accuracy, as stringent filtering may remove ecologically important rare taxa while lenient thresholds increase false positive rates [1] [4].

The compositional nature of microbiome sequencing data presents particular challenges, as counts represent proportions rather than absolute abundances, violating assumptions of traditional correlation analysis [1] [3]. Solutions include applying the centered log-ratio (CLR) transformation to remove dependencies between proportions [1] or using Dirichlet-multinomial models that directly account for compositionality [1]. For inter-kingdom networks involving bacteria, archaea, and fungi, datasets must be transformed independently before concatenation to avoid introducing bias and spurious edges [1]. Rarefaction is commonly employed to address uneven sequencing depth, though its appropriateness remains debated, with different association measures showing varying robustness to this procedure [1].
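The filtering and transformation steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference pipeline: the function names, the toy count table, the 50% prevalence cutoff, and the pseudocount of 1 used to handle zeros are all assumptions made for the example.

```python
import numpy as np

def prevalence_filter(counts, min_prevalence=0.2):
    """Keep taxa (columns) detected in at least `min_prevalence` of samples (rows)."""
    prevalence = (counts > 0).mean(axis=0)
    keep = prevalence >= min_prevalence
    return counts[:, keep], keep

def clr_transform(counts, pseudocount=1.0):
    """Centered log-ratio transform applied per sample (row).

    The pseudocount is an assumption of this sketch; other zero-handling
    strategies exist and may be preferable.
    """
    logged = np.log(counts + pseudocount)
    return logged - logged.mean(axis=1, keepdims=True)

# Toy count table: 4 samples x 4 taxa (made-up values for illustration).
counts = np.array([
    [120, 0, 30, 0],
    [ 80, 5, 60, 0],
    [200, 0, 10, 0],
    [ 50, 9, 40, 1],
], dtype=float)

filtered, kept = prevalence_filter(counts, min_prevalence=0.5)
clr = clr_transform(filtered)
print("taxa kept:", kept)
print("CLR row sums (should be ~0):", clr.sum(axis=1))
```

After the CLR step each sample's values sum to zero, which is a quick sanity check that the transform was applied per sample rather than per taxon.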

Association Inference and Network Construction

The core of network construction lies in estimating robust associations between microbial entities. Multiple approaches exist, each with distinct advantages and limitations. Correlation-based methods include Pearson's or Spearman's correlation coefficients applied to transformed data, SparCC which accounts for compositionality through an iterative approach, and the maximal information coefficient (MIC) [1] [5]. Conditional dependence methods, such as graphical probabilistic models and the SPRING (Semi-Parametric Rank-based approach for INference in Graphical model) algorithm, estimate partial correlations to distinguish direct from indirect associations [5]. Proportionality measures offer another compositionality-aware alternative specifically designed for relative abundance data [5].
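To make the distinction between marginal and conditional associations concrete, the following sketch simulates a chain in which taxon A influences B and B influences C, then compares ordinary correlations with partial correlations derived from the inverse covariance matrix. The simulated data and function name are illustrative assumptions, and the unregularized matrix inverse used here only works when samples greatly outnumber taxa; methods such as SPRING rely on regularized estimators instead.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000

# Chain A -> B -> C: A and C are associated only through B.
a = rng.normal(size=n)
b = 0.8 * a + rng.normal(scale=0.6, size=n)
c = 0.8 * b + rng.normal(scale=0.6, size=n)
data = np.column_stack([a, b, c])

def partial_correlations(x):
    """Partial correlations from the (unregularized) inverse covariance matrix."""
    precision = np.linalg.inv(np.cov(x, rowvar=False))
    d = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return pcor

marginal = np.corrcoef(data, rowvar=False)
partial = partial_correlations(data)

print("marginal corr(A, C):", round(marginal[0, 2], 3))
print("partial  corr(A, C | B):", round(partial[0, 2], 3))
```

The marginal correlation between A and C is substantial even though they never interact directly, while the partial correlation is close to zero once B is conditioned on.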

Following association estimation, sparsification transforms the dense association matrix into a meaningful network by selecting statistically significant edges. Approaches include simple thresholding, statistical testing (Student's t-test or permutation tests), or stability selection methods like StARS (Stability Approach to Regularization Selection) which identifies edges that persist across data subsamples [5]. The sparse associations are then transformed into dissimilarities and subsequently into similarities that serve as edge weights in the final network [5]. The entire workflow can be implemented using various software packages and computational tools, with popular choices including SPIEC-EASI, SPRING, and NetCoMi in R [5].
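As a rough illustration of the simplest sparsification option mentioned above, the sketch below computes a Spearman association matrix and keeps only edges whose absolute value exceeds a cutoff. The helper names, the toy abundance table, and the 0.6 cutoff are assumptions for demonstration; statistical testing or stability selection would replace the fixed threshold in a more careful analysis.

```python
import numpy as np

def average_ranks(x):
    """Rank a 1-D array, assigning tied values their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)          # last 1-based rank of each tie group
    starts = ends - counts + 1        # first 1-based rank of each tie group
    return ((starts + ends) / 2.0)[inverse]

def spearman_matrix(x):
    """Spearman correlation between all pairs of columns (taxa)."""
    ranks = np.column_stack([average_ranks(col) for col in x.T])
    return np.corrcoef(ranks, rowvar=False)

def threshold_network(assoc, cutoff=0.6):
    """Zero out weak associations and self-loops, keeping signed edge weights."""
    adj = np.where(np.abs(assoc) >= cutoff, assoc, 0.0)
    np.fill_diagonal(adj, 0.0)
    return adj

# Toy table: 6 samples x 4 taxa (made-up values, assumed already transformed).
abundance = np.array([
    [1,  2, 9, 3],
    [2,  4, 7, 1],
    [3,  5, 6, 4],
    [4,  8, 4, 1],
    [5,  9, 3, 5],
    [6, 12, 1, 2],
], dtype=float)

assoc = spearman_matrix(abundance)
network = threshold_network(assoc, cutoff=0.6)
print(np.round(network, 2))
```

The resulting matrix is a signed, weighted adjacency matrix: taxa 0 and 1 share a positive edge, taxon 2 is negatively linked to both, and taxon 3 is left unconnected.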

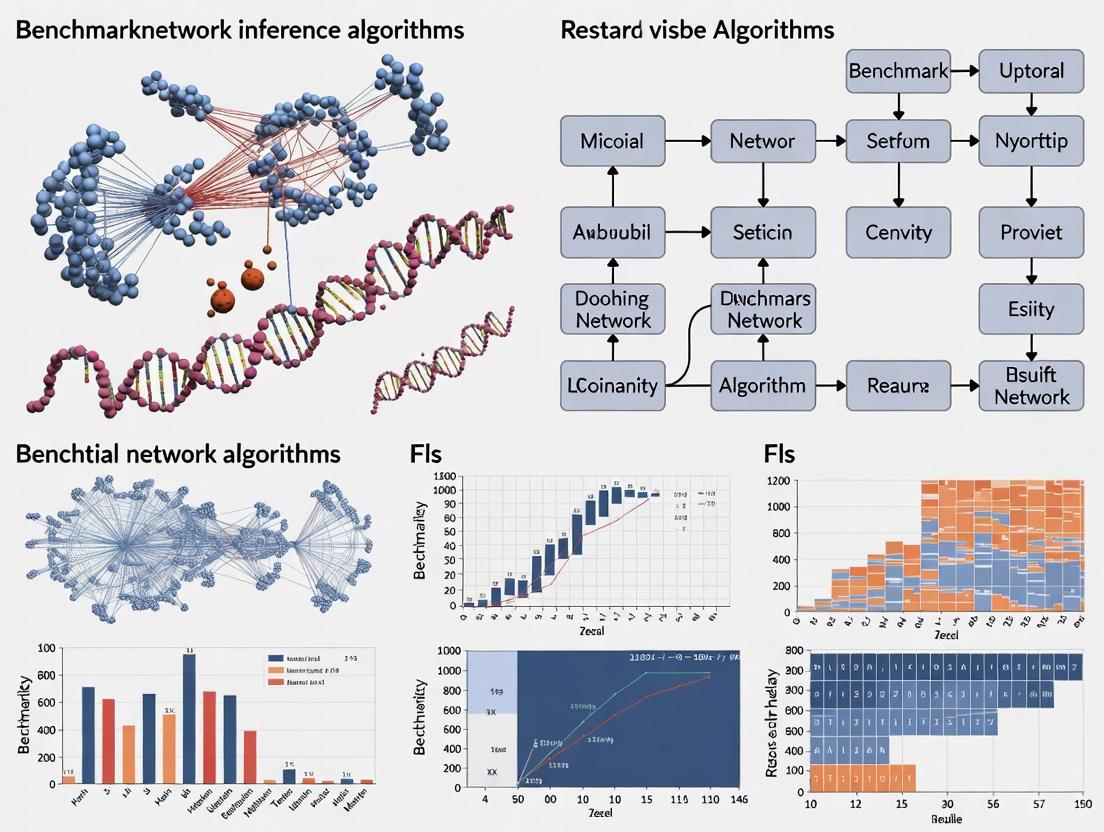

Diagram 1: Microbial network construction workflow showing key steps from raw data to final network.

Analytical Framework for Network Interpretation

Topological Properties and Ecological Interpretation

The interpretation of microbial co-occurrence networks relies heavily on analyzing their topological properties, which can be categorized into global network metrics and local node-level characteristics. Key global metrics include modularity, which quantifies how strongly taxa are compartmentalized into interconnected subgroups (modules), with higher modularity often associated with greater stability as disturbances are contained within modules [3]. The average path length represents the mean shortest distance between all node pairs, indicating overall network efficiency and connectivity [6] [7]. The clustering coefficient measures the degree to which nodes tend to cluster together, forming tightly interconnected groups [7]. The ratio of negative to positive interactions has been proposed as a stability indicator, with communities exhibiting higher proportions of negative interactions potentially being more resistant to perturbation [3].

At the node level, several centrality measures identify taxa with potentially important ecological roles. The degree of a node counts its number of connections, with highly connected "hub" taxa potentially playing stabilizing roles in the community [3] [7]. Betweenness centrality identifies nodes that lie on many shortest paths between other nodes, serving as critical connectors between network modules [6] [7]. Closeness centrality measures how quickly a node can reach all other nodes in the network, indicating potential influence spread [7]. Research on anaerobic digestion systems has demonstrated that lower-abundance genera (as low as 0.1%) can perform central hub roles, highlighting the importance of considering rare taxa in network analyses [6].
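Several of these metrics are simple enough to compute by hand on a small graph, which can help when checking the output of a network library. The sketch below uses only the Python standard library on a hypothetical five-taxon network; the taxon labels and edges are invented, and betweenness centrality is omitted for brevity since packages such as igraph provide it.

```python
from collections import deque

# Hypothetical undirected network: a triangle (A, B, C) with a tail C-D-E.
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D"), ("D", "E")]

def build_adjacency(edge_list):
    adj = {}
    for u, v in edge_list:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def degree(adj):
    """Number of neighbours per node."""
    return {node: len(nbrs) for node, nbrs in adj.items()}

def clustering_coefficient(adj, node):
    """Fraction of a node's neighbour pairs that are themselves connected."""
    nbrs = list(adj[node])
    k = len(nbrs)
    if k < 2:
        return 0.0
    links = sum(
        1 for i in range(k) for j in range(i + 1, k) if nbrs[j] in adj[nbrs[i]]
    )
    return 2.0 * links / (k * (k - 1))

def shortest_path_lengths(adj, source):
    """Breadth-first search distances from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def average_path_length(adj):
    """Mean shortest-path distance over all reachable ordered node pairs."""
    total, pairs = 0, 0
    for s in adj:
        for t, d in shortest_path_lengths(adj, s).items():
            if t != s:
                total += d
                pairs += 1
    return total / pairs

adj = build_adjacency(edges)
deg = degree(adj)
print("degree:", deg)
print("clustering of C:", clustering_coefficient(adj, "C"))
print("average path length:", average_path_length(adj))
```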

Table 2: Key Topological Metrics for Network Analysis

| Metric | Definition | Ecological Interpretation | Measurement Level |
|---|---|---|---|
| Modularity | Degree to which network is organized into densely connected subgroups | Compartmentalization of ecological niches; higher values may indicate stability | Global |
| Average Path Length | Mean shortest distance between all node pairs | Efficiency of potential communication or influence through network | Global |
| Clustering Coefficient | Degree of node clustering into interconnected triangles | Resilience through redundant connections; local stability | Global/Local |
| Degree | Number of connections a node has | Taxon connectivity; hub status indicates potential importance | Local |
| Betweenness Centrality | Number of shortest paths passing through a node | Connector role between modules; potential information flow control | Local |
| Closeness Centrality | Average distance of a node to all other nodes | Potential for rapid influence spread throughout community | Local |

Experimental Validation of Inferred Interactions

A critical challenge in microbial co-occurrence network analysis lies in validating computationally inferred interactions through experimental approaches. Generalized Lotka-Volterra (gLV) modeling provides one framework for validation by simulating multi-species microbial communities with known interaction patterns and comparing these with empirically derived co-occurrence networks [7]. These simulations have revealed that co-occurrence networks can recapitulate underlying interaction networks under certain conditions but lose interpretability when habitat filtering effects dominate [7]. Such modeling approaches have identified that networks may contain "hot spots" of spurious correlation around hub species that engage in many interactions [7].
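For readers unfamiliar with gLV simulation, the standard model describes each species' abundance x_i as growing at rate x_i * (r_i + sum_j a_ij * x_j), where r_i is the intrinsic growth rate and a_ij the effect of species j on species i. The sketch below integrates a three-species system with a simple forward Euler scheme. The growth rates, interaction matrix, step size, and function name are illustrative assumptions and are not taken from the cited studies.

```python
import numpy as np

def simulate_glv(x0, growth, interactions, dt=0.01, steps=5000):
    """Forward-Euler integration of dx_i/dt = x_i * (r_i + sum_j a_ij * x_j)."""
    x = np.array(x0, dtype=float)
    trajectory = [x.copy()]
    for _ in range(steps):
        dx = x * (growth + interactions @ x)
        x = np.clip(x + dt * dx, 0.0, None)  # abundances cannot go negative
        trajectory.append(x.copy())
    return np.array(trajectory)

# Known "ground truth" for the simulation (made-up values).
growth = np.array([1.0, 0.8, 0.6])
interactions = np.array([
    [-1.0,  0.0, -0.3],   # species 3 inhibits species 1
    [ 0.2, -1.0,  0.0],   # species 1 benefits species 2
    [ 0.0,  0.0, -1.0],   # species 3 only self-limits
])

traj = simulate_glv([0.1, 0.1, 0.1], growth, interactions)

# Analytical coexistence equilibrium solves: interactions @ x = -growth.
equilibrium = np.linalg.solve(interactions, -growth)
print("simulated end state:", np.round(traj[-1], 3))
print("analytical equilibrium:", np.round(equilibrium, 3))
```

Because the interaction matrix is known, networks inferred from abundance tables sampled from such simulations can be scored directly against it.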

More recent advancements include computational frameworks like MBPert, which leverages machine learning optimization with modified gLV formulations to infer species interactions from perturbation and time-series data [8]. This approach uses numerical solutions of differential equations and iterative parameter estimation to robustly capture microbial dynamics, outperforming traditional gradient matching methods [8]. When applied to Clostridium difficile infection in mice and human gut microbiota subjected to antibiotic perturbations, MBPert accurately recapitulated species interactions and predicted system dynamics [8]. Such methods generate directed, signed, and weighted interaction networks that potentially encode causal mechanisms, offering significant advantages over simple correlation-based networks [8].

Benchmarking Network Inference Approaches

Performance Comparison of Association Measures

The benchmarking of microbial network inference methods requires standardized evaluation metrics and datasets. Performance is typically assessed using sensitivity (true positive rate) and specificity (true negative rate) in detecting known interactions, particularly when using simulated communities with predefined interaction structures [7]. Different association measures demonstrate variable performance under distinct ecological scenarios and data characteristics. Correlation-based methods like Spearman and Pearson correlations are computationally efficient but susceptible to compositional effects and spurious correlations [1] [3]. Compositionally-aware methods like SparCC and SPIEC-EASI specifically address the compositional nature of microbiome data but may have higher computational demands [1] [5]. Conditional dependence methods like graphical lasso and SPRING can distinguish direct from indirect associations but require careful parameter tuning and stability selection [5].
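When a ground-truth network is available, sensitivity and specificity reduce to counting edges in a confusion table. The following sketch shows one way to do this for undirected networks stored as adjacency matrices; the function names and the two toy networks are assumptions for illustration.

```python
import numpy as np

def edge_confusion(true_adj, inferred_adj):
    """Count TP, FP, FN, TN over unique node pairs of an undirected network."""
    iu = np.triu_indices_from(true_adj, k=1)   # each pair once, no self-loops
    truth = true_adj[iu] != 0
    pred = inferred_adj[iu] != 0
    tp = int(np.sum(truth & pred))
    fp = int(np.sum(~truth & pred))
    fn = int(np.sum(truth & ~pred))
    tn = int(np.sum(~truth & ~pred))
    return tp, fp, fn, tn

def sensitivity_specificity(true_adj, inferred_adj):
    tp, fp, fn, tn = edge_confusion(true_adj, inferred_adj)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity

def adjacency_from_edges(n, edge_list):
    adj = np.zeros((n, n))
    for i, j in edge_list:
        adj[i, j] = adj[j, i] = 1.0
    return adj

# Toy example: 4 taxa, 3 true edges, 3 inferred edges (2 correct, 1 spurious).
true_adj = adjacency_from_edges(4, [(0, 1), (1, 2), (2, 3)])
inferred_adj = adjacency_from_edges(4, [(0, 1), (1, 2), (0, 3)])

sens, spec = sensitivity_specificity(true_adj, inferred_adj)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")
```

Real microbial networks are sparse, so true negatives usually dominate and specificity alone can look deceptively high; reporting both values together gives a fairer picture.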

Simulation studies using gLV models have provided crucial insights into methodological performance. These investigations reveal that the accuracy of co-occurrence networks in capturing true interactions depends heavily on sampling breadth (number of samples), community diversity, and interaction structure [7]. Networks inferred from limited sample sizes show reduced sensitivity and specificity, particularly for detecting negative interactions [7]. The Klemm-Eguiluz model, which generates networks with small-world, scale-free, and modular properties, may best represent real microbial communities and provides a rigorous testbed for method evaluation [7].

Table 3: Comparison of Network Inference Methods

| Method | Underlying Approach | Strengths | Limitations |
|---|---|---|---|
| Pearson/Spearman Correlation | Linear/monotonic association measure | Computational efficiency; intuitive interpretation | Sensitive to compositionality; cannot distinguish direct from indirect associations |
| SparCC | Compositionally-aware correlation | Accounts for compositional bias; robust to sparse data | Iterative approach computationally intensive for large datasets |
| SPRING | Conditional dependence with compositionality | Distinguishes direct from indirect associations; handles zeros | Requires stability selection; complex parameter tuning |
| SPIEC-EASI | Graphical models with inverse covariance | Compositionally-aware; different sparsity methods available | Computationally intensive; assumes sparse underlying network |
| gLV-based Inference | Dynamical systems modeling | Captures causal interactions; predicts perturbation response | Requires time-series or perturbation data; computationally complex |

Impact of Data Preprocessing on Inference Accuracy

Data preprocessing decisions significantly impact network inference outcomes, creating substantial variability in results across studies. Rarefaction remains controversial, with some studies demonstrating it decreases precision for correlation-based methods while others find minimal impact when using compositionally-robust association measures [1]. Prevalence filtering thresholds represent a critical parameter, with more stringent filters (e.g., >20% prevalence) reducing false positives but potentially excluding ecologically important rare taxa [1] [4]. Research on anaerobic digestion systems has revealed that taxa with abundances as low as 0.1% can serve as network hubs, highlighting the potential consequences of aggressive filtering [6].

The challenge of zero inflation requires special consideration, as matching zeros across samples can create artificially strong associations between rarely detected taxa [4]. Some association measures like Bray-Curtis dissimilarity are designed to ignore matching zeros, but still require sufficient nonzero value pairs for reliable association estimation [4]. Recent methodological developments provide formulas to determine the maximum number of zeros above which meaningful association testing becomes impossible, offering more principled guidance for data filtering [4]. Additionally, batch effects and technical variability introduced during sample collection, DNA extraction, and sequencing can create spurious associations if not properly accounted for in the analysis pipeline [3].

Diagram 2: Distinguishing direct microbial interactions from environment-induced correlations.

Applications and Case Studies in Microbial Ecology

Environmental and Engineered Systems

Microbial co-occurrence network analysis has yielded significant insights into the structure and function of environmental and engineered microbial ecosystems. In anaerobic digestion systems, network topological properties have been linked to reactor parameters and process performance [6]. Specifically, hydrolysis efficiency correlated positively with clustering coefficient and negatively with normalized betweenness, while the influent particulate COD ratio and relative differential hydrolysis-methanogenesis efficiency correlated negatively with average path length [6]. These findings demonstrate how network topology can serve as a bioindicator for system functional status. Furthermore, thermophilic digestion networks contained more connector genera, suggesting stronger inter-module communication under high-temperature conditions [6].

In soil ecosystems, co-occurrence networks have been applied across geographic scales from single aggregates to planetary-level surveys, revealing how abiotic and biotic factors determine community structure [9]. These analyses have identified keystone taxa and their relationships to specific soil functions, while also inferring mechanisms of community assembly [9]. However, soil network studies face particular challenges including high spatial heterogeneity, strong environmental filtering, and diverse microbial functional guilds that complicate interpretation [9]. Researchers have cautioned against the uncritical application of network analysis without proper hypothesis testing or validation [9].

Host-Associated Microbiomes

In host-associated contexts, co-occurrence network analysis has revealed how microbial interactions contribute to health and disease states. In the human gut, healthy microbiota typically exhibit higher connectivity and stability, while dysbiotic states often show disrupted network topology with reduced inter-species associations [3] [8]. For example, colorectal cancer patients exhibit gut microbiomes with fewer microbe-microbe associations, suggesting that network disintegration may accompany disease progression [8]. These topological differences provide insights beyond simple compositional changes, potentially revealing functional disruptions in microbial community organization.

Network analysis has also proven valuable in predicting responses to perturbations such as antibiotic treatments or dietary interventions [8]. Studies of repeated ciprofloxacin exposure on human gut microbiota revealed how network topology shifts during and after antibiotic perturbation, identifying which species interactions are most resilient to disturbance [8]. Similarly, analysis of Clostridium difficile infection in gnotobiotic mice demonstrated how network approaches can identify potential bacteriotherapy targets by modeling species interactions and community dynamics [8]. These applications highlight the translational potential of microbial network analysis in clinical settings.

Challenges and Future Perspectives

Methodological Limitations and Interpretation Caveats

Despite their utility, microbial co-occurrence networks face several methodological challenges that limit interpretability. A fundamental issue concerns the ecological meaning of edges, which are often interpreted as direct biotic interactions but may instead reflect shared environmental preferences, habitat filtering, or common responses to unmeasured variables [4] [9]. The problem of environmental confounding is particularly pronounced in heterogeneous sample sets where microbial distributions are strongly influenced by abiotic factors [4]. Strategies to address this include incorporating environmental factors as additional nodes in networks, stratifying samples into more homogeneous groups, or statistically regressing out environmental effects before network construction [4].
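The third strategy, regressing out environmental effects, can be illustrated with ordinary least squares. In the sketch below two simulated taxa do not interact at all but both respond to a shared environmental variable; their correlation largely disappears once that variable is regressed out. The variable names, effect sizes, and the assumption that abundances are already on a transformed (for example CLR) scale are all hypothetical.

```python
import numpy as np

def residualize(abundance, covariates):
    """Remove the linear effect of environmental covariates from each taxon."""
    design = np.column_stack([np.ones(len(covariates)), covariates])
    coef, *_ = np.linalg.lstsq(design, abundance, rcond=None)
    return abundance - design @ coef

rng = np.random.default_rng(1)
n = 500

# Hypothetical environmental driver (e.g. pH) affecting both taxa.
ph = rng.normal(size=n)
taxon_a = 1.5 * ph + rng.normal(scale=0.5, size=n)
taxon_b = 1.2 * ph + rng.normal(scale=0.5, size=n)
abundance = np.column_stack([taxon_a, taxon_b])

raw_r = np.corrcoef(abundance, rowvar=False)[0, 1]
residuals = residualize(abundance, ph.reshape(-1, 1))
adjusted_r = np.corrcoef(residuals, rowvar=False)[0, 1]

print(f"correlation before adjustment: {raw_r:.3f}")
print(f"correlation after adjustment:  {adjusted_r:.3f}")
```

This only removes linear effects of measured covariates, so unmeasured or nonlinear environmental drivers can still produce spurious edges.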

The challenge of higher-order interactions (HOIs) presents another complexity, where the relationship between two species is modified by the presence of a third species [4]. Most network approaches focus exclusively on pairwise associations, potentially missing these important multi-species effects [4]. Additionally, the sampling resolution and spatial heterogeneity of microbial communities can significantly impact network inference, as samples that aggregate distinct microhabitats may obscure fine-scale interaction patterns [4]. Finally, the distinction between correlation and causation remains problematic, with some researchers advocating for dynamical modeling approaches or careful experimental design to establish causal relationships [8] [7].

Emerging Methodological Frontiers

Several promising approaches are emerging to address current limitations in microbial network inference. Dynamical systems modeling using tools like MBPert leverages time-series and perturbation data to infer directed, signed interaction networks that potentially encode causal mechanisms [8]. These methods combine generalized Lotka-Volterra equations with machine learning optimization to predict system dynamics under novel conditions [8]. Multi-omic integration represents another frontier, where networks simultaneously incorporate taxonomic, functional, metabolomic, and environmental data to provide more comprehensive ecological insights [3]. Such integrated approaches can connect taxonomic co-occurrence patterns with underlying metabolic processes and ecosystem functions.

Control theory frameworks are being developed to identify minimal sets of "driver" species that can steer microbial communities toward desired states, with applications in ecosystem restoration, bioremediation, and clinical interventions [8]. These approaches leverage network topology to predict which species manipulations will most effectively influence community structure and function [8]. Finally, standardized benchmarking initiatives using simulated communities with known interaction networks are providing rigorous evaluation of inference methods across diverse ecological scenarios [7]. These efforts establish best practices and performance standards for the field, addressing current concerns about reproducibility and validation [1] [9].

Table 4: Key Research Reagents and Computational Tools for Microbial Network Analysis

| Resource | Type | Primary Function | Application Context |
|---|---|---|---|
| 16S rRNA Sequencing | Laboratory method | Taxonomic profiling of bacterial/archaeal communities | Initial community characterization; node identity definition |
| Shotgun Metagenomics | Laboratory method | Whole-community sequencing for taxonomic and functional profiling | Enhanced taxonomic resolution; functional network construction |
| SPRING Package | Computational tool | Conditional dependency network inference for compositional data | Construction of sparse microbial association networks |
| SpiecEasi | Computational tool | Compositionally-aware network inference via graphical models | Microbial interaction network inference from abundance data |
| igraph | Computational tool | Network analysis and visualization | Calculation of topological metrics; network visualization |
| NetCoMi | Computational tool | Comprehensive network construction and comparison | Multi-group network analysis; differential network topology |
| gLV Models | Mathematical framework | Dynamical modeling of species interactions | Validation of inferred interactions; prediction of perturbation effects |
| Centered Log-Ratio Transformation | Statistical method | Compositional data transformation for Euclidean space | Data normalization before correlation analysis |

Microbial co-occurrence networks represent a powerful methodological framework for extracting ecological insights from complex microbiome datasets. When constructed and interpreted with appropriate attention to methodological limitations, these networks reveal organizational principles of microbial communities that remain hidden from purely compositional analyses. The continuing development of more sophisticated inference algorithms, validation frameworks, and multi-omic integration approaches promises to enhance the reliability and biological relevance of microbial network analysis. As standardized benchmarking and experimental validation become more widespread, microbial co-occurrence networks will increasingly fulfill their potential as tools for predicting ecosystem dynamics, identifying intervention targets, and advancing our fundamental understanding of microbial community assembly and function.

Microbial communities are complex ecosystems where numerous species interact through intricate networks that fundamentally influence human health and disease. The structure and dynamics of these interaction networks play a critical role in host metabolism, immune function, and physiological homeostasis [10] [11]. Disruptions in these microbial networks—known as dysbiosis—have been implicated in a wide spectrum of conditions, including inflammatory bowel disease (IBD), neurological disorders, skin diseases, and various cancers [10]. Consequently, accurately inferring and modeling these microbial interactions has emerged as a pivotal challenge in biomedical research, with significant implications for developing novel diagnostics and therapeutics.

The field faces substantial methodological challenges due to the inherent complexity of microbial ecosystems. Microbial data is typically sparse, compositional, and high-dimensional, with far more microbial taxa than samples in most studies [12] [13]. Additionally, microbial interactions are dynamic, changing over time and in response to environmental perturbations, dietary interventions, or medical treatments [13] [11]. Traditional correlation-based approaches often fail to distinguish direct from indirect associations and cannot capture the conditional dependencies that characterize true ecological interactions [13] [14].

This comparison guide provides a systematic benchmarking of contemporary computational frameworks for microbial network inference, with particular emphasis on their applicability to biomedical research. We evaluate algorithmic performance across multiple dimensions—including accuracy, scalability, temporal modeling capability, and biological relevance—to equip researchers with evidence-based criteria for method selection in drug development and mechanistic studies of host-microbe interactions.

Benchmarking Microbial Network Inference Algorithms

Comparative Performance Analysis

Table 1: Performance Benchmarking of Network Inference Algorithms

| Algorithm | Underlying Methodology | Temporal Modeling | Key Strengths | Prediction Accuracy (Bray-Curtis) | Optimal Application Context |
|---|---|---|---|---|---|
| Graph Neural Networks [15] | Graph convolutional networks with temporal convolution | Multi-step future prediction (2-8 months) | Captures relational dependencies between species; Excellent for long-term forecasting | High (good to very good accuracy across 24 WWTPs) | Longitudinal studies requiring long-term predictions; Systems with complex microbial interdependencies |
| LUPINE [13] | Partial least squares regression with conditional independence | Sequential time-point modeling using past information | Handles small sample sizes and time points; Captures dynamic interactions evolving over time | Validated on 4 case studies with relevant taxon identification | Intervention studies with limited time points; Mouse and human longitudinal studies |
| coralME [16] | Genome-scale metabolic modeling (ME-models) | Not inherently temporal, but can simulate responses | Links microbial genomes to phenotypic attributes; Predicts metabolic responses to nutrients | Identified gut chemistry shifts in IBD patients | Personalized nutrition interventions; Understanding metabolic basis of disease |
| fuser [12] | Fused lasso with cross-environment learning | Spatial and temporal dynamics across niches | Preserves niche-specific signals while sharing information across environments; Reduces false positives/negatives | Comparable to glmnet in homogeneous settings, superior in cross-habitat prediction | Multi-environment studies; Systems with distinct ecological niches |
| SparCC/SpiecEasi [13] | Correlation/partial correlation-based approaches | Single time-point only | Compositional data awareness; Established benchmarks for cross-sectional studies | Limited in longitudinal settings | Initial exploratory analysis of cross-sectional data |

Experimental Protocols and Methodologies

The graph neural network approach employs a structured workflow for predicting microbial community dynamics:

- Data Acquisition and Preprocessing: Collect longitudinal 16S rRNA amplicon sequencing data (2-5 times per month over 3-8 years). Classify Amplicon Sequence Variants (ASVs) using ecosystem-specific taxonomic databases.

- Feature Selection: Select the top 200 most abundant ASVs (representing >50% of sequence reads) to focus on ecologically significant taxa.

- Pre-clustering: Implement four clustering methods (biological function, IDEC algorithm, graph network interaction strengths, and ranked abundances) to group ASVs into clusters of five for model training.

- Model Architecture:
  - Graph convolution layer: Learns interaction strengths and extracts relational features between ASVs
  - Temporal convolution layer: Extracts temporal patterns across time series data
  - Output layer: Fully connected neural networks predict future relative abundances

- Training Regimen: Use moving windows of 10 consecutive historical samples to predict the next 10 time points (2-4 months forward).

- Validation: Perform chronological 3-way split of data into training, validation, and test sets. Evaluate using Bray-Curtis dissimilarity, mean absolute error, and mean squared error metrics.
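The data-handling side of this workflow (chronological splitting, moving windows, and Bray-Curtis scoring) can be sketched independently of the neural network itself. The example below is a minimal illustration on random data: the 70/15/15 split proportions, the function names, and the simulated abundance series are assumptions, since the description above specifies only a chronological three-way split and windows of 10 samples.

```python
import numpy as np

def chronological_split(series, train_pct=70, val_pct=15):
    """Split a time-ordered array into train/validation/test without shuffling."""
    n = len(series)
    i = n * train_pct // 100
    j = n * (train_pct + val_pct) // 100
    return series[:i], series[i:j], series[j:]

def moving_windows(series, n_in=10, n_out=10):
    """Pair each run of `n_in` samples with the `n_out` samples that follow it."""
    inputs, targets = [], []
    for start in range(len(series) - n_in - n_out + 1):
        inputs.append(series[start:start + n_in])
        targets.append(series[start + n_in:start + n_in + n_out])
    return np.array(inputs), np.array(targets)

def bray_curtis(u, v):
    """Bray-Curtis dissimilarity between two non-negative abundance vectors."""
    return np.abs(u - v).sum() / (u + v).sum()

# Simulated relative abundances: 100 time points x 5 ASVs (random, for shape only).
rng = np.random.default_rng(0)
series = rng.dirichlet(np.ones(5), size=100)

train, val, test = chronological_split(series)
X, Y = moving_windows(train, n_in=10, n_out=10)
print("split sizes:", len(train), len(val), len(test))
print("window tensors:", X.shape, Y.shape)
print("example Bray-Curtis:", round(bray_curtis(series[0], series[1]), 3))
```

Splitting before windowing keeps every validation and test sample strictly later in time than the training data, which avoids leaking future information into the model.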

The LUPINE methodology specializes in longitudinal microbiome analysis with three distinct modeling approaches:

- Single Time Point Modeling:
  - For taxa pair (i,j), compute partial correlation while controlling for other taxa
  - Use first principal component of X^-(i,j) (all taxa except i and j) as one-dimensional approximation
  - Addresses high-dimensionality (p >> n) challenge through dimensionality reduction

- Two Time Point Modeling:
  - Employ Projection to Latent Structures (PLS) regression to maximize covariance between current and preceding time point datasets
  - Extract latent components that capture temporal dependencies

- Multiple Time Point Modeling:
  - Implement blockPLS for datasets with several previous time points
  - Maximize covariance between current and all past time point data
  - Sequentially incorporate historical information to model evolving interactions

The framework assumes individuals within a specific group share a common network structure at each time point, enabling group-specific analyses for control versus intervention studies.
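The single time point idea, approximating "all other taxa" by their first principal component and correlating what remains, can be sketched as follows. This is a simplified illustration of the concept described above and not the LUPINE implementation: the function names are hypothetical, the data are simulated, and the sketch ignores compositional adjustments and significance testing.

```python
import numpy as np

def first_pc_scores(x):
    """Scores of the first principal component of a samples x taxa matrix."""
    centered = x - x.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, 0] * s[0]

def residual(y, z):
    """Residual of y after linear regression on a single control variable z."""
    design = np.column_stack([np.ones_like(z), z])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef

def pc_partial_correlation(x, i, j):
    """Correlate taxa i and j after controlling for PC1 of all remaining taxa."""
    others = np.delete(x, [i, j], axis=1)
    z = first_pc_scores(others)
    return float(np.corrcoef(residual(x[:, i], z), residual(x[:, j], z))[0, 1])

# Simulated data: every taxon follows one shared latent factor plus noise,
# so no pair has a direct association.
rng = np.random.default_rng(42)
n_samples, n_taxa = 300, 22
latent = rng.normal(size=(n_samples, 1))
x = latent + rng.normal(scale=0.5, size=(n_samples, n_taxa))

marginal = float(np.corrcoef(x[:, 0], x[:, 1])[0, 1])
partial = pc_partial_correlation(x, 0, 1)
print(f"marginal correlation: {marginal:.3f}")
print(f"PC1-adjusted partial correlation: {partial:.3f}")
```

Because the control variable is one-dimensional, the calculation remains feasible even when taxa far outnumber samples, which is the motivation given above for the dimensionality reduction.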

The coralME workflow generates genome-scale models to predict metabolic interactions:

- Model Generation: Automatically construct ME-models (Metabolism and Expression models) that link microbial genomes to phenotypic attributes using large-scale genetic data.

- Nutrient Response Simulation: Input different dietary conditions (e.g., low-iron, low-zinc, or high-macronutrient diets) to predict how they affect microbial abundances and metabolic output.

- Disease State Integration: Incorporate microbial expression data from patients (e.g., IBD patients) to reveal real-time metabolic activities in disease contexts.

- Interaction Mapping: Identify specific cross-feeding relationships, nutrient competition, and metabolic cooperation between microbial taxa.

- Therapeutic Prediction: Simulate how prebiotics, probiotics, or dietary interventions might restore beneficial microbial functions.

The fuser algorithm implements a novel approach for multi-environment network inference:

- Data Collection: Gather microbiome abundance data from multiple environments (soil, aquatic, host-associated) with different ecological conditions.

- Preprocessing Pipeline:

- Apply log10 transformation with pseudocount (log10(x+1)) to raw OTU counts

- Standardize group sizes by calculating mean group size and randomly subsampling

- Remove low-prevalence OTUs to reduce sparsity

- Ensure equal sample numbers per experimental group

- Same-All Cross-validation (SAC):

- "Same" regime: Train and test within the same environmental niche

- "All" regime: Train on combined data from multiple environmental niches

- Fused Lasso Implementation: Retain subsample-specific signals while sharing relevant information across environments during training, generating distinct environment-specific predictive networks.

- Performance Evaluation: Compare test errors against baseline algorithms (e.g., glmnet) in both Same and All scenarios to assess cross-environment predictive robustness.
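The preprocessing pipeline listed above might look like the following sketch. The function name, the 10% prevalence threshold, and the choice to subsample every group down to the smallest group (so that sampling without replacement always succeeds) are assumptions made for illustration; they are not taken from the fuser package.

```python
import math
import random


def preprocess(counts, groups, min_prevalence=0.1, seed=0):
    """counts: one list of OTU counts per sample; groups: one label per sample."""
    n_samples, n_otus = len(counts), len(counts[0])

    # 1. Keep only OTUs that are present (count > 0) in enough samples.
    keep = [k for k in range(n_otus)
            if sum(row[k] > 0 for row in counts) / n_samples >= min_prevalence]

    # 2. Apply the log10(x + 1) transform to the retained OTUs.
    logged = [[math.log10(row[k] + 1) for k in keep] for row in counts]

    # 3. Randomly subsample each group to a common size (assumed: smallest group).
    by_group = {}
    for idx, label in enumerate(groups):
        by_group.setdefault(label, []).append(idx)
    target = min(len(idxs) for idxs in by_group.values())
    rng = random.Random(seed)
    selected = sorted(i for idxs in by_group.values()
                      for i in rng.sample(idxs, target))

    return [logged[i] for i in selected], [groups[i] for i in selected], keep


counts = [[9, 0, 0], [99, 3, 0], [0, 5, 0], [9, 0, 0], [9, 1, 0], [0, 2, 0]]
groups = ["soil", "soil", "soil", "soil", "gut", "gut"]
X, labels, kept = preprocess(counts, groups)
```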

Visualization of Methodologies and Workflows

[Figure: Graph Neural Network Architecture for Microbial Prediction]

[Figure: LUPINE Longitudinal Modeling Framework]

Table 2: Essential Research Resources for Microbial Interaction Studies

| Resource Category | Specific Tools/Techniques | Application in Microbial Network Research |

|---|---|---|

| Sequencing Technologies | 16S rRNA amplicon sequencing, Shotgun metagenomics | Profiling microbial community structure at species/strain level; Functional potential assessment [10] |

| DNA Extraction Methods | Mechanical lysis, Trypsin digestion, Saponin-based differential lysis | Minimizing human DNA contamination in tissue samples; Optimizing microbial DNA yield [10] |

| Reference Databases | MiDAS 4 ecosystem-specific database [15] | High-resolution taxonomic classification of ASVs in specific environments |

| Computational Frameworks | coralME, fuser, LUPINE, glmnet | Generating predictive models; Inferring microbial interactions from abundance data [16] [12] [13] |

| Validation Approaches | Same-All Cross-validation (SAC) [12], Mono- and co-culture experiments [11] | Assessing algorithm performance; Establishing ground truth for microbial interactions |

| Data Types | Longitudinal abundance data, Environmental parameters, Metabolic profiles | Training and testing predictive models; Understanding context-dependency of interactions [15] [10] |

The accurate inference of microbial interaction networks is central to understanding the microbiome's role in human health and disease. This benchmarking analysis indicates that method selection should be guided by specific research objectives: graph neural networks excel in long-term temporal forecasting, LUPINE offers robust performance in intervention studies with limited time points, coralME provides mechanistic insight into metabolic interactions, and fuser performs best in cross-environment predictions. While established cross-sectional methods such as SparCC (correlation-based) and SpiecEasi (conditional dependence-based) remain valuable for initial exploratory analyses, the field is rapidly advancing toward more sophisticated, dynamic modeling approaches that can capture the temporal and contextual complexity of microbial communities.

Future developments should prioritize the integration of spatial considerations, standardized benchmarking datasets, and improved validation through experimental microbiology. As these computational methods mature, they will increasingly enable researchers to translate microbial ecology principles into targeted therapeutic strategies for modulating the microbiome to treat disease—ushering in a new era of microbiome-based medicine. The ongoing standardization of methods and development of comprehensive interaction databases will be crucial for realizing the full potential of microbial network inference in biomedical research and therapeutic development.

Inferring microbial interaction networks from abundance data is a cornerstone of modern microbiome research, promising insights into community stability, dysbiosis, and ecological drivers [13]. This capability is particularly vital for drug development professionals exploring microbiome-disease interactions and seeking novel therapeutic targets [17] [18]. However, the field has become an algorithmic jungle—a dense and confusing landscape of diverse methods including correlation-based approaches, partial correlation methods, and modern machine learning algorithms [12] [13]. Each claims superiority, yet researchers face a critical problem: without standardized, rigorous benchmarking, determining which algorithm will produce reliable, biologically plausible inferences for their specific experimental context is nearly impossible.

The fundamental challenge stems from the inherent complexity of microbiome data itself, which is typically sparse, compositional, and high-dimensional [13]. Different algorithms make different statistical assumptions to handle these properties, but their performance varies dramatically across data types, sample sizes, and ecological contexts [12]. For instance, a method optimized for large, cross-sectional human gut microbiome data may perform poorly when applied to a longitudinal study with few time points or to a low-diversity environmental sample [13]. This inconsistency threatens the validity of biological conclusions and hinders translational applications in drug discovery [19].

This article demonstrates that implementing systematic benchmarking frameworks is not an academic exercise but a practical necessity. Through comparative analysis of contemporary algorithms and the introduction of standardized evaluation protocols, we provide researchers with the evidence-based toolkit needed to navigate the algorithmic jungle and achieve reliable microbial network inference.

Landscape of Microbial Network Inference Algorithms

The field of microbial network inference has evolved from simple correlation-based methods to sophisticated algorithms designed to handle the specific challenges of microbiome data. Current methods can be broadly categorized by their underlying mathematical approaches and their applicability to different experimental designs, particularly the growing importance of longitudinal studies.

Table 1: Key Microbial Network Inference Algorithms and Their Characteristics

| Algorithm | Underlying Method | Data Type | Key Strength | Primary Limitation |

|---|---|---|---|---|

| LUPINE [13] | Partial Least Squares Regression & Conditional Independence | Longitudinal | Infers dynamic networks across time points; suitable for small samples and few time points | Group-level inference only; no individual-level networks |

| LUPINE_single [13] | Principal Component Analysis & Conditional Independence | Cross-Sectional | Handles high-dimensional data (p > n) using one-dimensional approximation | Designed for single time point analysis only |

| fuser [12] | Fused Lasso Regression | Grouped Multi-Environment | Shares information across habitats while preserving niche-specific edges | Requires grouped sample structure for optimal performance |

| SparCC [13] | Correlation with Compositional Correction | Cross-Sectional | Accounts for compositional nature of microbiome data | Only captures correlations, not conditional dependencies |

| SpiecEasi [13] | Partial Correlation / Graphical Models | Cross-Sectional | Infers direct associations via conditional independence | Computationally intensive for very large taxon sets |

| glmnet [12] | Lasso Regression | General Purpose | Well-established general-purpose regularization | Assumes uniform parameters across environments |

The emergence of specialized algorithms like LUPINE for longitudinal data represents a significant advancement. Traditional approaches that assume static interactions become limiting when studying microbial dynamics in response to interventions, such as dietary changes or antibiotic treatments [13]. LUPINE addresses this by sequentially incorporating information from all previous time points using projection to latent structures (PLS) regression, enabling it to model how microbial interactions evolve over time [13].

For studies comparing multiple environments or experimental conditions, fuser introduces a novel approach that avoids the false consensus of fully pooled models and the false specificity of completely independent models [12]. By using fused lasso regularization, it shares information between related environments (e.g., similar soil types or body sites) while still allowing for environment-specific interactions, thereby improving cross-environment prediction accuracy [12].
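The trade-off described above can be written as a generic fused lasso objective over K environments. This is the textbook form, shown as an assumption for illustration; the exact weighting and penalty structure used by fuser may differ. Here X^(k) and y^(k) are the predictor matrix and response for environment k, n_k is its sample size, and λ and γ are tuning parameters.

```latex
\min_{\beta^{(1)},\dots,\beta^{(K)}}
  \sum_{k=1}^{K} \frac{1}{2 n_k}
    \left\lVert y^{(k)} - X^{(k)} \beta^{(k)} \right\rVert_2^2
  + \lambda \sum_{k=1}^{K} \left\lVert \beta^{(k)} \right\rVert_1
  + \gamma \sum_{k < k'} \left\lVert \beta^{(k)} - \beta^{(k')} \right\rVert_1
```

Setting γ = 0 recovers one independent lasso model per environment (the "false specificity" extreme), while letting γ grow large forces all coefficient vectors to coincide, which is equivalent to a single pooled model (the "false consensus" extreme). Intermediate values share information while still permitting environment-specific edges.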

Experimental Benchmarking: Framework and Comparative Performance

The Same-All Cross-Validation (SAC) Framework

Rigorous algorithm evaluation requires specialized cross-validation frameworks that reflect real-world research questions. The Same-All Cross-validation (SAC) framework, adapted from Hocking et al. (2024), tests algorithms in two critical scenarios [12]:

- Same Scenario: Training and testing within the same environmental niche or experimental group to assess within-habitat performance.

- All Scenario: Training on combined data from multiple environments and testing on individual environments to evaluate cross-environment generalization.

This framework is particularly valuable because it mirrors two common research contexts: studying a single, well-defined microbiome habitat versus conducting meta-analyses across multiple related environments [12]. The SAC protocol begins with standardized data preprocessing, including log-transformation of OTU counts with pseudocount addition, group size standardization through balanced subsampling, and filtering of low-prevalence OTUs to reduce sparsity [12].

[Figure: SAC Framework Workflow]

Quantitative Performance Comparison

Applying the SAC framework to benchmark current algorithms reveals distinct performance patterns. The following table summarizes results from benchmarking studies conducted on publicly available microbiome datasets, including the Human Microbiome Project (HMP), MovingPictures, and specialized soil microbiome data [12] [13].

Table 2: Algorithm Performance Benchmarking Across Multiple Datasets

| Algorithm | Same Scenario Test Error | All Scenario Test Error | Longitudinal Data Accuracy | Small Sample Performance |

|---|---|---|---|---|

| fuser | Comparable to glmnet | Reduced by 15-30% vs. baselines | Not Specifically Designed | Not Specifically Optimized |

| LUPINE | Not Applicable | Not Applicable | Superior to single time point methods | Excellent (n < 50) |

| LUPINE_single | High with cross-sectional data | N/A | Less accurate than LUPINE | Excellent (p > n) |

| SpiecEasi | Moderate to High | Varies | Static networks only | Moderate |

| glmnet | Low (benchmark) | Increased vs. Same scenario | Static networks only | Poor with high dimensionality |

The benchmarking data indicate that fuser outperforms conventional approaches like glmnet in cross-environment prediction, reducing test error by 15-30% in All scenarios while maintaining comparable performance within homogeneous environments [12]. This makes it particularly valuable for studies analyzing microbial communities across multiple related habitats or experimental conditions.

For longitudinal studies, LUPINE shows distinct advantages over single time point methods. In case studies tracking microbiome responses to interventions, LUPINE successfully identified dynamically changing taxa interactions that were obscured by static network methods [13]. Its ability to handle small sample sizes (n < 50) makes it particularly suitable for expensive or difficult longitudinal studies with limited sampling points [13].

Essential Research Reagent Solutions for Microbial Network Inference

Implementing robust benchmarking requires specific computational tools and resources. The following table details key "research reagents" - datasets, software, and validation frameworks - essential for state-of-the-art microbial network inference studies.

Table 3: Research Reagent Solutions for Network Inference Benchmarking

| Reagent / Resource | Type | Function in Benchmarking | Key Features |

|---|---|---|---|

| SAC Framework [12] | Validation Protocol | Evaluates within and cross-habitat prediction accuracy | Standardized comparison of algorithm generalizability |

| fuser R Package [12] | Software Algorithm | Infers environment-specific networks with information sharing | Fused lasso implementation for multi-environment data |

| LUPINE R Code [13] | Software Algorithm | Infers dynamic networks from longitudinal data | PLS regression for temporal data; handles small sample sizes |

| HMP Dataset [12] | Reference Data | Provides standardized human microbiome data for benchmarking | 3,285 samples, 5,830 taxa across multiple body sites |

| MovingPictures Dataset [12] | Longitudinal Data | Enables testing of temporal network inference algorithms | 1,967 samples across 4 body sites with temporal dynamics |

| Preprocessed Necromass Data [12] | Specialized Dataset | Tests algorithms on simple, controlled communities | 36 taxa, 69 samples with known treatment conditions |

Methodological Protocols for Reproducible Benchmarking

Implementing the SAC Validation Framework

The SAC framework requires specific methodological steps to ensure reproducible benchmarking [12]:

- Data Preprocessing Pipeline: Apply log10(x+1) transformation to raw OTU counts, standardize group sizes by subsampling to the smallest group size, and remove low-prevalence OTUs (typically those appearing in <10% of samples).

- Stratified Fold Creation: For "Same" scenario, perform standard k-fold cross-validation within each environment. For "All" scenario, create folds that combine data from all environments while maintaining proportional representation.

- Network Inference and Evaluation: Train each algorithm on the training folds and compute test error on held-out samples using appropriate metrics (e.g., mean squared error for association strength, precision-recall for edge detection).

- Statistical Comparison: Use paired statistical tests to compare algorithm performance across multiple datasets and folds, accounting for multiple comparisons.
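The fold-creation step can be sketched as below. The function name and return format are hypothetical, and this is one plausible reading of the Same and All regimes rather than the reference SAC implementation: fold membership is assigned within each group so that every fold keeps the groups in proportion, and both regimes are evaluated on the same held-out samples.

```python
import random


def sac_splits(groups, n_folds=3, seed=0):
    """Return (regime, target_group, train_idx, test_idx) tuples."""
    rng = random.Random(seed)
    by_group = {}
    for idx, label in enumerate(groups):
        by_group.setdefault(label, []).append(idx)

    # Assign each sample to a fold within its own group (stratification).
    fold_of = {}
    for idxs in by_group.values():
        shuffled = idxs[:]
        rng.shuffle(shuffled)
        for pos, idx in enumerate(shuffled):
            fold_of[idx] = pos % n_folds

    splits = []
    for fold in range(n_folds):
        for label, idxs in by_group.items():
            test = [i for i in idxs if fold_of[i] == fold]
            # "Same": train only on the target group's remaining samples.
            same_train = [i for i in idxs if fold_of[i] != fold]
            # "All": train on the remaining samples from every group.
            all_train = [i for i in range(len(groups)) if fold_of[i] != fold]
            splits.append(("same", label, same_train, test))
            splits.append(("all", label, all_train, test))
    return splits


groups = ["soil"] * 6 + ["gut"] * 6
splits = sac_splits(groups)
```

Comparing the test error of the "same" and "all" entries for a given group and fold shows whether pooling data from other environments helps or hurts prediction for that group.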

[Figure: Benchmarking Methodology Workflow]

Critical Analysis of Benchmarking Results

The benchmarking data reveals several critical insights for researchers and drug development professionals. First, no single algorithm dominates all scenarios—method selection must be driven by experimental design and research questions. For cross-sectional studies of single environments, established methods like SpiecEasi and LUPINE_single provide robust inference, while longitudinal designs require specialized approaches like LUPINE [13].

Second, algorithm performance is context-dependent. The fuser algorithm excels in multi-environment studies but offers no advantage for single-habitat analysis [12]. Similarly, LUPINE's strength with small sample sizes makes it ideal for intervention studies with limited sampling points, but its group-level inference may miss important individual variations [13].

Third, benchmarking against biologically relevant outcomes is essential. While predictive accuracy on held-out data is important, the ultimate validation comes from biological plausibility—whether inferred networks recapitulate known ecological relationships or generate testable hypotheses about microbial interactions [12] [13].

The expanding diversity of microbial network inference algorithms represents both opportunity and challenge for microbiome researchers and drug development professionals. While no universal best method exists, systematic benchmarking using frameworks like SAC provides the compass needed to navigate this complex landscape. The evidence clearly demonstrates that algorithm performance is highly context-dependent, with methods like fuser excelling in multi-environment studies and LUPINE providing unique capabilities for longitudinal designs with small sample sizes.

For research aiming to translate microbiome insights into therapeutic discoveries, embracing these benchmarking practices is not optional—it is fundamental to producing reliable, reproducible biological insights. By selecting algorithms matched to their specific experimental contexts through rigorous validation, researchers can escape the algorithmic jungle and build a more robust understanding of microbial community dynamics, accelerating the development of microbiome-based therapeutics.

The accurate inference of microbial ecological networks from high-throughput sequencing data is a cornerstone of modern microbiome research. Such networks provide crucial insights into microbial community dynamics, stability, and functional relationships, with direct applications in therapeutic development and ecological management. However, the path from raw data to reliable networks is fraught with statistical challenges. Three fundamental concepts—sparsity, compositionality, and ground truth—critically shape the evaluation and benchmarking of network inference algorithms. Sparsity reflects the reality that most species do not interact, compositionality acknowledges that sequencing data reveals relative rather than absolute abundances, and ground truth represents the known interactions against which algorithms are validated. This guide examines how these conceptual frameworks influence the design and interpretation of benchmarks for microbial network inference, providing researchers and drug development professionals with a structured comparison of methodological approaches and their performance under controlled conditions.

Core Conceptual Challenges in Network Inference

The Sparsity Principle in Microbial Networks

Microbial ecological networks are inherently sparse, meaning that any single microorganism interacts with only a small fraction of other community members. This sparsity arises from niche specialization and functional redundancy within communities. From an analytical perspective, sparsity presents both a challenge and an opportunity: it complicates the detection of true interactions against a background of noise but provides a statistical constraint that can improve inference accuracy. Methods that incorporate sparsity constraints through regularization techniques like LASSO or sparse regression models explicitly leverage this principle to reduce false positive rates. In benchmarking contexts, failing to account for network sparsity can lead to overly dense, inaccurate network reconstructions that misrepresent true ecological relationships.

The Compositionality Problem

Microbiome data is fundamentally compositional because sequencing instruments yield relative abundances that sum to a constant total (e.g., proportions of reads per taxon) rather than absolute cell counts. This compositionality creates analytical challenges where correlations between relative abundances may not reflect true biological interactions but rather artifacts of the data structure. Spurious correlations can emerge from the closure effect, where an increase in one taxon's proportion necessarily causes decreases in others'. Proper handling of compositionality is therefore critical for accurate network inference. Benchmarking studies must evaluate how different methods control for these compositionality effects, typically through data transformations like centered log-ratio (CLR) or isometric log-ratio (ILR) transformations, or through models specifically designed for compositional data [20].
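The closure effect can be demonstrated with a small constructed example. The abundances below are invented for illustration: taxa A and B are uncorrelated in absolute terms, but a third taxon C that swings between low and high abundance induces a positive correlation between their proportions.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


# Absolute abundances in four samples (hypothetical values).
taxon_a = [10, 20, 10, 20]
taxon_b = [10, 10, 20, 20]
taxon_c = [100, 10, 10, 100]

# Closure: convert each sample to proportions that sum to one.
totals = [a + b + c for a, b, c in zip(taxon_a, taxon_b, taxon_c)]
rel_a = [a / t for a, t in zip(taxon_a, totals)]
rel_b = [b / t for b, t in zip(taxon_b, totals)]

r_absolute = pearson(taxon_a, taxon_b)  # zero by construction
r_relative = pearson(rel_a, rel_b)      # positive, purely from closure
```

Nothing about the relationship between A and B changed between the two calculations; only the normalization did, which is why correlation on raw proportions is a poor basis for edge inference.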

The Ground Truth Dilemma

Establishing reliable ground truth—known microbial interactions for validating inference algorithms—represents perhaps the most significant challenge in network benchmarking. Unlike some biological domains where true interactions can be definitively established through controlled experiments, comprehensive ground truth for complex microbial communities is rarely available. Limited validation data can be derived from cultured model systems, targeted experiments, or established metabolic partnerships, but these represent only a tiny fraction of interactions in natural communities. Consequently, benchmarking often relies on simulated datasets where interactions are predefined, creating a tension between biological realism and methodological validation. The quality and realism of ground truth data directly impacts the practical relevance of benchmarking conclusions, necessitating careful interpretation of performance metrics [20].

Experimental Benchmarking Framework

Simulation Design and Data Generation

Comprehensive benchmarking requires realistic simulated data that captures the complex statistical properties of real microbiome datasets while maintaining known ground truth interactions. The Normal to Anything (NORtA) algorithm has emerged as a robust approach for generating such data, as it preserves arbitrary marginal distributions and correlation structures observed in empirical datasets [20]. Realistic simulations should incorporate:

- Distributional Complexity: Real microbiome data exhibits over-dispersion, zero-inflation, and high collinearity between taxa, which must be replicated in simulations to provide meaningful benchmarking.

- Multiple Templates: Using various real datasets as templates ensures method evaluation across diverse data structures, such as the high-dimensional Konzo dataset (1,098 taxa, 1,340 metabolites), intermediate Adenomas dataset (500 taxa, 463 metabolites), and smaller Autism spectrum disorder dataset (322 taxa, 61 metabolites) [20].

- Controlled Association Structures: Introducing known microbe-metabolite relationships with varying strengths and densities allows precise evaluation of inference accuracy.
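The core idea of NORtA can be sketched in a few lines using NumPy. This is a simplified illustration with a hypothetical function name: it draws correlated normals, maps them to uniforms, and pushes them through each feature's empirical quantile function taken from a template dataset. It omits the step in which the input correlation matrix is adjusted so that the output correlations match a target, so the resulting dependence is only approximately that of `corr`.

```python
import numpy as np
from statistics import NormalDist


def norta_sample(template, corr, n_samples, seed=0):
    """Simulate data with the template's empirical margins and a Gaussian
    copula dependence structure given by `corr`."""
    rng = np.random.default_rng(seed)
    template = np.asarray(template, dtype=float)
    p = template.shape[1]

    # 1. Correlated standard normal draws.
    z = rng.multivariate_normal(np.zeros(p), corr, size=n_samples)
    # 2. Standard normal CDF maps each draw to a uniform on [0, 1].
    u = np.vectorize(NormalDist().cdf)(z)
    # 3. Empirical quantiles of each template column restore the margins,
    #    including zero inflation and skew.
    out = np.empty_like(z)
    for k in range(p):
        out[:, k] = np.quantile(template[:, k], u[:, k])
    return out


# Hypothetical template: a zero-inflated taxon and a skewed metabolite.
template = [[0, 5], [0, 7], [3, 9], [10, 30], [0, 2]]
sim = norta_sample(template, [[1.0, 0.6], [0.6, 1.0]], n_samples=200)
```

Because the association structure is specified up front through `corr`, the simulated data come with a known ground truth against which inferred edges can be scored.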

Method Categories and Evaluation Metrics

Network inference methods can be categorized by their primary analytical approach, each addressing different research questions and data structures. Performance evaluation requires multiple metrics to capture different aspects of inference quality [20]:

Table 1: Method Categories for Microbial Network Inference

| Category | Research Goal | Representative Methods | Key Considerations |

|---|---|---|---|

| Global Association | Detect overall structure | Procrustes Analysis, Mantel Test, MMiRKAT | Provides general assessment before detailed analysis |

| Data Summarization | Identify major patterns | CCA, PLS, RDA, MOFA2 | Reduces dimensionality but may miss specific interactions |

| Individual Associations | Detect pairwise relationships | Correlation measures, Regression models | Faces multiple testing challenges; requires careful correction |

| Feature Selection | Identify most relevant features | LASSO, sCCA, sPLS | Addresses multicollinearity; provides sparse solutions |

Table 2: Performance Metrics for Network Inference Benchmarking

| Performance Dimension | Key Metrics | Interpretation |

|---|---|---|

| Global Association Detection | Statistical power, Type-I error control | Ability to detect overall structure while minimizing false positives |

| Data Summarization Quality | Variance explained, Shared components identified | Effectiveness in capturing and explaining shared variance |

| Individual Association Accuracy | Sensitivity, Specificity, Precision | Accuracy in detecting true pairwise relationships |

| Feature Selection Stability | Feature stability, Non-redundancy | Consistency in identifying relevant features across datasets |
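For the individual association metrics in the table above, a minimal sketch of edge-level scoring against a known ground truth is shown here. The function name and the toy edge lists are hypothetical; edges are treated as undirected.

```python
from itertools import combinations


def edge_metrics(true_edges, inferred_edges, n_taxa):
    """Sensitivity, specificity, and precision for an undirected edge set."""
    truth = {frozenset(e) for e in true_edges}
    found = {frozenset(e) for e in inferred_edges}
    all_pairs = {frozenset(p) for p in combinations(range(n_taxa), 2)}

    tp = len(truth & found)
    fp = len(found - truth)
    fn = len(truth - found)
    tn = len(all_pairs - truth - found)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {"sensitivity": ratio(tp, tp + fn),
            "specificity": ratio(tn, tn + fp),
            "precision": ratio(tp, tp + fp)}


# Four taxa, two true edges; the method recovers one and adds a false one.
scores = edge_metrics(true_edges=[(0, 1), (1, 2)],
                      inferred_edges=[(1, 0), (2, 3)],
                      n_taxa=4)
```

In sparse networks the number of true negatives dwarfs everything else, so specificity tends to look high for almost any method; precision and sensitivity are usually the more informative pair.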

Comparative Performance Analysis

Quantitative Benchmarking Results

Recent systematic benchmarking of nineteen integrative methods across multiple simulated scenarios reveals distinct performance patterns. Methods were evaluated under realistic conditions mirroring the complex properties of microbiome-metabolome data, with specific attention to their handling of sparsity, compositionality, and varying data dimensions [20].

Table 3: Method Performance Across Different Data Scenarios

| Method Category | High-Dimensional Data | Intermediate Dimensions | Small Sample Size | Compositionality Handling |

|---|---|---|---|---|

| Global Association | Moderate power | High power | Low power | Varies by transformation |

| Data Summarization | Good performance | Best performance | Limited utility | Good with CLR/ILR |

| Individual Associations | High false positives | Moderate accuracy | Low reliability | Dependent on transformation |

| Feature Selection | Best performance | Good performance | Variable performance | Excellent with proper normalization |

Transformation Impact on Inference Accuracy

The choice of data transformation significantly impacts method performance, particularly for addressing compositionality. Common approaches include:

- Centered Log-Ratio (CLR): Takes the logarithm of each taxon's abundance relative to the geometric mean of its sample, which addresses compositionality but requires careful handling of zeros (for example, by adding a pseudocount).

- Isometric Log-Ratio (ILR): Uses orthonormal basis functions to transform compositional data to Euclidean space, better preserving metric properties but requiring more complex implementation.

- Alpha Transformation: Applies a power transformation to reduce skewness before further analysis, often combined with other approaches.

Methods that explicitly incorporate compositional transformations (CLR, ILR) generally outperform those that apply standard statistical methods without such adjustments, particularly for individual association detection and feature selection tasks. The performance advantage is most pronounced in high-dimensional settings with strong compositional effects [20].
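As a concrete illustration of the CLR transformation, the sketch below applies it to a single sample. The function name and the pseudocount of 0.5 are assumptions for illustration; the appropriate zero-handling strategy depends on the dataset.

```python
import math


def clr(counts, pseudocount=0.5):
    """Centered log-ratio transform of one sample's counts."""
    logs = [math.log(c + pseudocount) for c in counts]
    # Subtracting the mean log equals dividing by the geometric mean.
    mean_log = sum(logs) / len(logs)
    return [value - mean_log for value in logs]


sample = [10, 0, 5, 85]
transformed = clr(sample)
```

Two properties are worth noting: the transformed values of a sample always sum to zero, and, without a pseudocount, multiplying all counts by a constant (for example, a deeper sequencing run) leaves the result unchanged.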

Research Reagent Solutions

The implementation of robust network inference benchmarks requires specific analytical tools and computational resources. The following table details key research reagents and their functions in experimental workflows for evaluating microbial network inference algorithms.

Table 4: Essential Research Reagents for Network Inference Benchmarking

| Reagent/Tool | Function | Application Context |

|---|---|---|

| NORtA Algorithm | Generates realistic simulated data with arbitrary marginal distributions and correlation structures | Creating benchmarking datasets with known ground truth [20] |

| SpiecEasi | Estimates microbial association networks using sparse inverse covariance estimation | Constructing correlation networks for simulation templates [20] |

| CLR/ILR Transformations | Addresses compositionality in microbiome data | Data preprocessing to reduce spurious correlations [20] |

| Multi-dimensional Performance Metrics | Evaluates method performance across multiple dimensions | Comprehensive benchmarking beyond single metrics [20] |

| Real Dataset Templates | Provides empirical data structures for simulation | Ensuring simulated data reflects real-world complexity [20] |

The benchmarking of microbial network inference methods must explicitly address the fundamental challenges of sparsity, compositionality, and ground truth to provide meaningful guidance for researchers. Systematic evaluation reveals that no single method performs optimally across all scenarios—the choice of algorithm must be guided by the specific research question, data properties, and analytical goals. Methods incorporating sparsity constraints generally outperform dense solutions, while proper handling of compositionality through appropriate transformations is essential for accurate inference. The continuing development of more realistic simulation frameworks and validation datasets will further enhance benchmarking rigor, ultimately supporting more reliable network inference in microbiome research with significant implications for therapeutic development and ecological management.

The Algorithmic Toolkit: From Correlation to Causal Inference and Real-World Applications

Table of Contents

- Introduction

- Methodological Foundations

- Comparative Analysis of Inference Methods

- Experimental Protocols for Benchmarking

- Research Reagent Solutions

- Conclusion and Future Directions

Understanding the complex interactions within microbial communities is a fundamental goal in microbial ecology and has significant implications for human health, environmental science, and biotechnology. Microbial network inference—the process of predicting associations between microbial taxa from abundance data—serves as a critical tool for visualizing and understanding these complex ecosystems [21]. The field has seen the development of a diverse array of computational algorithms, which can be broadly categorized into methods based on correlation, regression, and graphical models [21] [11]. Each category comes with its own philosophical underpinnings, mathematical assumptions, and performance characteristics.

Benchmarking these algorithms is a non-trivial challenge, as their performance is highly dependent on data characteristics, environmental context, and the specific biological questions being asked [12] [11]. This guide provides an objective comparison of these methodological categories, framing them within the context of contemporary benchmarking research. It synthesizes current experimental data and protocols to equip researchers, scientists, and drug development professionals with the knowledge to select, apply, and validate the most appropriate inference methods for their studies of microbial communities.

Methodological Foundations

At their core, network inference algorithms aim to identify statistically significant associations between the observed abundances of different microbial taxa. The conceptual and mathematical approaches to defining these associations vary significantly between the three main categories.

[Figure: Logical relationship and typical workflow for selecting and applying these methods]

Correlation-based methods quantify the strength and direction of a linear relationship between two variables without implying causality or accounting for the influence of other variables in the community [22] [23]. The result is a symmetric measure of association, leading to undirected network edges. The Pearson correlation coefficient (r) is a classic example, but others like Spearman's rank correlation are also used to capture monotonic nonlinear relationships [21].

Regression-based methods, such as regularized linear models (e.g., LASSO), take a different approach. They express the relationship in the form of an equation, modeling a response variable (e.g., the abundance of one taxon) from an explanatory variable (e.g., the abundance of another) [24] [23]. This framework is more naturally suited to asymmetric, predictive relationships and can control for other factors. The output is a slope coefficient (b) that can be interpreted as an effect size, potentially leading to directed network edges [23].

Graphical Models, particularly Gaussian Graphical Models (GGMs), represent a more advanced approach by inferring conditional dependencies [25]. Instead of simple pairwise correlation, GGMs estimate the association between two taxa after accounting for the abundances of all other taxa in the network. An edge in a GGM implies a direct relationship, which helps to filter out spurious correlations mediated by a third taxon. The core mathematical object is the precision matrix (the inverse of the covariance matrix), where a zero entry indicates conditional independence between two taxa [25].
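The difference between marginal correlation and conditional dependence can be made concrete with a three-taxon chain A - B - C. The precision matrix below is a constructed example, not real data. Partial correlations follow from the precision matrix Ω via ρ_ij = -Ω_ij / sqrt(Ω_ii Ω_jj).

```python
import numpy as np

# Precision matrix of a chain A - B - C. The zero in position (A, C)
# encodes that A and C are conditionally independent given B.
precision = np.array([[2.0, -1.0, 0.0],
                      [-1.0, 2.0, -1.0],
                      [0.0, -1.0, 2.0]])
covariance = np.linalg.inv(precision)


def to_correlation(cov):
    """Rescale a covariance matrix to a correlation matrix."""
    d = np.sqrt(np.diag(cov))
    return cov / np.outer(d, d)


def partial_correlations(prec):
    """Partial correlation matrix derived from a precision matrix."""
    d = np.sqrt(np.diag(prec))
    pcor = -prec / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return pcor


marginal = to_correlation(covariance)
partial = partial_correlations(np.linalg.inv(covariance))
```

Here A and C have a marginal correlation of 1/3, which a correlation-based method would report as an edge, while their partial correlation is zero because the association is entirely mediated by B. In practice the precision matrix must be estimated from data, typically with a sparsity penalty, rather than specified directly.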

Comparative Analysis of Inference Methods

A direct comparison of these categories reveals distinct trade-offs between interpretability, computational complexity, and robustness to data artifacts, which are critical for benchmarking.

Table 1: Comparative analysis of microbial network inference methods.

| Feature | Correlation Methods | Regression Methods | Graphical Models |
|---|---|---|---|
| Core Concept | Measures symmetric, pairwise linear or monotonic association [22]. | Models the abundance of one taxon as a function of others; predictive [23]. | Models conditional dependence between taxa given all others in the community [25]. |
| Causality/Direction | No causality; undirected networks [22]. | Can imply directionality (directed networks) but does not prove causality. | Typically undirected, representing direct conditional associations. |
| Handling of Compositionality | Poor without specific transformation; highly susceptible to false positives [21]. | Improved with regularization (e.g., LASSO) and log-ratio transformations [21]. | Improved, as conditioning on other taxa can partially address confounding. |
| Key Assumptions | Linear relationship (Pearson); variables are bivariate normal for inference [22] [23]. | Linear relationship; residuals are normally distributed and independent [23]. | Multivariate normality of the data; a key property is that zero partial correlation implies conditional independence [26]. |
| Computational Demand | Low | Moderate to High | High |
| Robustness to Noise | Low; highly sensitive to outliers and spurious correlations. | Moderate; regularization provides some robustness. | Moderate; the conditional dependence framework is robust to indirect effects. |
| Primary Output | Correlation coefficient (e.g., r). | Regression coefficient (e.g., b). | Partial correlation coefficient. |
| Example Algorithms | SparCC [21], MENAP [21]. | LASSO (e.g., CCLasso) [21], fuser [12]. | SPIEC-EASI [21], MGMRF [25]. |


Synthesized Benchmarking Performance: Empirical evaluations consistently show that no single method dominates across all scenarios. A study comparing multinomial processing tree (MPT) models found that while regression approaches like latent-trait regression adequately recover parameter-covariate relations, correlations are often underestimated in homogeneous samples without proper correction [27]. In cross-environment predictions, novel regression-based algorithms like fuser—which uses a fused LASSO approach to retain subsample-specific signals while sharing information across environments—have been shown to outperform standard algorithms (e.g., glmnet). fuser reduces test errors by mitigating both the false positives of fully independent models and the false negatives of fully pooled models [12]. This highlights a key trend: methods that explicitly model the ecological context (e.g., spatial or temporal niches) tend to yield more accurate and biologically plausible networks.

Experimental Protocols for Benchmarking

Robust benchmarking requires standardized protocols to evaluate the quality of inferred networks. A significant challenge is the general lack of comprehensive, fully resolved interaction databases for microbial communities to serve as ground truth [11]. Researchers have therefore developed several computational and experimental strategies for validation.

1. Cross-Validation Frameworks: Cross-validation is a fundamental technique for assessing the predictive performance and generalizability of inference algorithms [12]. The Same-All Cross-validation (SAC) framework is a recent innovation designed to rigorously evaluate algorithm performance across diverse ecological niches [12]. The SAC protocol involves two distinct validation scenarios run over multiple folds (e.g., k=5 or k=10):

- "Same" Scenario: The dataset is partitioned, and the algorithm is trained and tested on data from the same environmental niche or habitat. This evaluates performance within a homogeneous environment.

- "All" Scenario: Data from multiple environments are pooled. The algorithm is trained on a fold containing this mixed data and tested on a held-out fold from the same pool. This tests the algorithm's ability to handle heterogeneous data.
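The two scenarios can be sketched as fold generators. This is one reading of the description above, not the reference implementation from [12]; the function names, the habitat labels, and the sample identifiers are hypothetical, and the inference and scoring steps that would run on each (train, test) pair are left out.

```python
import random


def k_fold_splits(items, k, seed=0):
    """Yield (train, test) lists for k folds after a seeded shuffle."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield train, test


def same_scenario(samples_by_habitat, k, seed=0):
    """'Same': train and test within each habitat separately."""
    for habitat, samples in samples_by_habitat.items():
        for train, test in k_fold_splits(samples, k, seed):
            yield habitat, train, test


def all_scenario(samples_by_habitat, k, seed=0):
    """'All': pool every habitat, then train and test on folds of the pooled data."""
    pooled = [s for samples in samples_by_habitat.values() for s in samples]
    yield from k_fold_splits(pooled, k, seed)


# Hypothetical sample identifiers grouped by habitat.
samples_by_habitat = {
    "gut": [f"gut_{i}" for i in range(10)],
    "skin": [f"skin_{i}" for i in range(10)],
}
same_splits = list(same_scenario(samples_by_habitat, k=5))
all_splits = list(all_scenario(samples_by_habitat, k=5))
```

Comparing the test error an algorithm achieves on `same_splits` with its error on `all_splits` indicates how much it loses (or gains) when habitats are mixed.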

The workflow for this protocol is detailed below:

2. Data Preprocessing Protocol: The quality of inference is heavily dependent on proper data normalization [12]. A standard preprocessing pipeline for microbiome count data includes:

- Transformation: Apply a log10(x + 1) transformation to raw OTU counts to stabilize variance and reduce the influence of highly abundant taxa.

- Subsampling: Standardize group sizes by randomly subsampling an equal number of samples from each experimental group to prevent bias.

- Sparsity Reduction: Remove low-prevalence OTUs (e.g., those present in only a small fraction of samples) to reduce noise.
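The three steps above can be sketched on a small samples-by-taxa count table. The helper names, the toy counts, the prevalence threshold of 0.5, and the choice to subsample every group down to the smallest group size are assumptions made for illustration; the text does not fix any of them.

```python
import math
import random


def log_transform(counts):
    """Apply log10(x + 1) to every count in a samples-by-taxa table."""
    return [[math.log10(c + 1) for c in row] for row in counts]


def subsample_groups(sample_ids_by_group, seed=0):
    """Randomly keep the same number of samples per group (the smallest group size)."""
    rng = random.Random(seed)
    size = min(len(ids) for ids in sample_ids_by_group.values())
    return {g: sorted(rng.sample(ids, size)) for g, ids in sample_ids_by_group.items()}


def filter_low_prevalence(table, min_prevalence):
    """Keep taxa (columns) that are nonzero in at least min_prevalence of samples."""
    n_samples = len(table)
    keep = [
        j for j in range(len(table[0]))
        if sum(1 for row in table if row[j] > 0) / n_samples >= min_prevalence
    ]
    return [[row[j] for j in keep] for row in table], keep


# Toy OTU counts: 4 samples (rows) by 3 taxa (columns).
counts = [
    [0, 9, 99],
    [0, 0, 999],
    [1, 9, 0],
    [0, 99, 9],
]
groups = {"control": ["c1", "c2", "c3"], "treated": ["t1", "t2"]}

transformed = log_transform(counts)
balanced = subsample_groups(groups)
filtered, kept_taxa = filter_low_prevalence(transformed, min_prevalence=0.5)
```

Because log10(0 + 1) is 0, zeros stay zeros after the transformation, so the prevalence filter gives the same result whether it is applied before or after the log step.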

3. Validation with Synthetic Communities: For absolute validation, studies have fully resolved the interaction network of synthetic microbial communities in vitro [11]. Mono- and co-culture growth data from these defined communities provides a biological benchmark against which the predictions of different algorithms can be directly compared to assess accuracy.
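When a resolved interaction network is available, comparing an inferred network against it reduces to comparing two edge sets. The sketch below computes precision, recall, and F1 under the simplifying assumption that edges are undirected and unsigned; the taxon labels and both edge lists are invented for the example.

```python
def edge_set(pairs):
    """Normalise undirected edges so (a, b) and (b, a) count as the same edge."""
    return {tuple(sorted(pair)) for pair in pairs}


def score_edges(predicted, truth):
    """Precision, recall and F1 of predicted edges against a ground-truth edge list."""
    predicted, truth = edge_set(predicted), edge_set(truth)
    true_positives = len(predicted & truth)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground truth from co-culture experiments and one algorithm's output.
truth = [("A", "B"), ("B", "C"), ("C", "D")]
predicted = [("B", "A"), ("C", "D"), ("A", "D"), ("A", "C")]

scores = score_edges(predicted, truth)
```

Two of the four predicted edges are correct and two of the three true edges are recovered, so precision is 0.5 and recall is 2/3. Reporting both matters because a dense inferred network can reach high recall simply by predicting many edges.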

Research Reagent Solutions

The following table details key computational tools, datasets, and algorithmic approaches that form the essential "research reagents" for conducting microbial network inference and benchmarking studies.

Table 2: Key resources for microbial co-occurrence network inference research.

| Resource Name | Type | Primary Function / Characteristic | Relevance in Benchmarking |
|---|---|---|---|
| SparCC [21] | Software Algorithm | Infers correlations for compositional data from the variances of log-ratios between taxa, assuming a sparse underlying network. | A baseline correlation method; performance often compared against more complex models. |
| SPIEC-EASI [21] | Software Algorithm | Infers networks using Gaussian Graphical Models (GGMs) to estimate conditional dependencies. | Represents the graphical model category; used to evaluate the value of conditioning on the full community. |
| glmnet / LASSO [21] [12] | Software Algorithm / Method | Infers networks using regularized linear regression (L1 penalty) to enforce sparsity. | A standard regression baseline; its performance is a common benchmark in studies [12]. |
| fuser [12] | Software Algorithm | A novel fused LASSO algorithm that shares information between habitats while preserving niche-specific edges. | Used to test advanced regression models that account for environmental context; shown to lower test error in cross-habitat prediction [12]. |
| HMPv35 [12] | Reference Dataset | 16S rRNA data from multiple human body sites; 10,730 taxa, 6,000 samples. | A benchmark dataset for evaluating algorithm performance on large, complex, but naturally derived communities. |
| MovingPictures [12] | Reference Dataset | Longitudinal 16S rRNA data from body sites of two individuals; 22,765 taxa, 1,967 samples. | Used to test algorithm performance in capturing temporal dynamics and stability of microbial associations. |
| SAC Framework [12] | Benchmarking Protocol | A cross-validation method to evaluate algorithm generalizability within and across environments. | Provides a standardized experimental protocol for comparative algorithm evaluation. |

The landscape of microbial network inference is methodologically rich, with correlation, regression, and graphical models each offering distinct advantages and limitations. Correlation methods provide a simple and intuitive starting point but are often prone to spurious results. Regression methods offer a more robust, predictive framework, with modern implementations like fuser demonstrating superior performance in ecologically complex scenarios. Graphical models hold the promise of identifying direct, conditional interactions but come with stringent data assumptions and high computational costs.

Current benchmarking efforts, facilitated by protocols like SAC and validation against synthetic communities, clearly indicate that the choice of algorithm is context-dependent. There is no universal "best" method. For researchers, the key is to align the methodological choice with the biological question and data structure. Future developments in the field will likely focus on integrating multiple data types (e.g., metabolomics), improving scalability for massive datasets, and creating more robust methods that explicitly account for spatial organization and temporal dynamics—areas that remain underexplored [11]. The creation of comprehensive, curated interaction databases will also be crucial for moving the field toward more reliable and predictive models of microbial community dynamics.

In the field of microbial ecology, correlation-based methods serve as fundamental tools for inferring potential interactions between microorganisms from abundance data. These methods help researchers construct association networks that can reveal cooperative, competitive, and symbiotic relationships within microbial communities. Among the most widely used approaches are Pearson correlation, Spearman correlation, and SparCC (Sparse Correlations for Compositional data), each with distinct mathematical foundations and applicability to different data scenarios [28] [29] [30].

The accurate inference of microbial networks is crucial for advancing our understanding of microbiome dynamics in various environments, including the human gut, soil ecosystems, and industrial bioreactors. Correlation-based approaches are particularly valuable because they can be applied to high-throughput sequencing data to generate hypotheses about microbial interactions that can later be validated experimentally [31]. The development of specialized methods like SparCC addresses unique challenges in microbiome data, such as compositionality, where relative abundances sum to a constant value, making traditional correlation measures potentially misleading [29] [30].

Benchmarking studies comparing these methods have become essential for guiding researchers in selecting appropriate tools for their specific data characteristics and research questions. The performance of Pearson, Spearman, and SparCC can vary significantly depending on factors such as data sparsity, diversity levels, network density, and the presence of technical artifacts like excessive zeros in count data [29] [32]. Understanding the strengths and limitations of each method is paramount for drawing accurate biological inferences from microbial association networks.

Theoretical Foundations and Mathematical Formulations

Pearson Correlation Coefficient

The Pearson correlation coefficient measures the linear relationship between two continuous variables, assessing how a change in one variable is associated with a proportional change in another variable [28] [33]. It operates on the actual values of the data rather than ranks and is defined as the covariance of the two variables divided by the product of their standard deviations. The Pearson correlation coefficient (r) ranges from -1 to +1, where values close to +1 indicate a strong positive linear relationship, values close to -1 indicate a strong negative linear relationship, and values near 0 suggest no linear relationship [28].

For variables X and Y, the Pearson correlation is calculated as:

r = Σ[(Xᵢ - X̄)(Yᵢ - Ȳ)] / √[Σ(Xᵢ - X̄)² Σ(Yᵢ - Ȳ)²]

where X̄ and Ȳ are the sample means of X and Y, respectively. The Pearson correlation assumes that both variables are normally distributed, the relationship is linear, and the data are homoscedastic (constant variance along the regression line) [33]. In microbial ecology, Pearson correlation is sensitive to the compositionality of data and can be influenced by outliers, which are common in amplicon sequencing datasets [30].
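The formula translates directly into a few lines of code. The sketch below is a plain standard-library implementation run on invented abundance values (`taxon_a`, `taxon_b`), with a second series in which a single sample is replaced by an aberrant value to illustrate the outlier sensitivity noted above.

```python
import math


def pearson(x, y):
    """Pearson r: summed cross-deviations over the root of the summed squared deviations."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


taxon_a = [1.0, 2.0, 3.0, 4.0, 5.0]
taxon_b = [2.0, 4.0, 6.0, 8.0, 10.0]         # exactly proportional to taxon_a
taxon_b_outlier = [2.0, 4.0, 6.0, 8.0, 0.0]  # same series with one aberrant sample

r_linear = pearson(taxon_a, taxon_b)
r_outlier = pearson(taxon_a, taxon_b_outlier)
```

In this toy case, changing one of five observations moves r from 1 to 0, which is why a single dropout or contaminated sample can create or erase an edge in a Pearson-based network.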

Spearman Rank Correlation Coefficient

The Spearman rank correlation coefficient evaluates monotonic relationships between two continuous or ordinal variables, assessing whether the variables tend to change together, though not necessarily at a constant rate [28] [33]. Unlike Pearson, Spearman correlation is based on the ranked values for each variable rather than the raw data, making it a non-parametric method that doesn't assume normal distribution of the data [28].

For variables X and Y, the Spearman correlation coefficient (ρ) is calculated as:

ρ = 1 - [6Σdᵢ²] / [n(n² - 1)]

where dᵢ is the difference between the ranks of the i-th observation in X and in Y, and n is the number of observations. This shortcut form is exact only when there are no tied ranks. Spearman correlation is less sensitive to outliers than Pearson correlation and can detect monotonic nonlinear relationships [33]. This makes it particularly useful for microbial data that may not meet normality assumptions or when the relationship between microbial abundances follows trends that are consistent in direction but not necessarily linear [33] [34].
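A minimal sketch of the rank-difference formula follows. It assumes no tied values, which is the condition under which the shortcut formula holds; with ties (common in zero-rich count data), Spearman's coefficient is instead computed as the Pearson correlation of average ranks, which this example does not implement. The function names and abundance values are invented for illustration.

```python
def ranks(values):
    """Return 1-based ranks; raise if ties are present, since the shortcut formula assumes none."""
    if len(set(values)) != len(values):
        raise ValueError("ties present: use the Pearson correlation of average ranks instead")
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(x, y):
    """Spearman rho from squared rank differences (valid without ties)."""
    n = len(x)
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


taxon_a = [3, 10, 25, 40, 500]   # grows monotonically but far from linearly
taxon_b = [1, 2, 4, 8, 16]
taxon_c = [2, 1, 4, 3, 5]        # mostly, but not perfectly, ordered like taxon_b

rho_monotonic = spearman(taxon_a, taxon_b)
rho_partial = spearman(taxon_b, taxon_c)
```

Because only the ordering matters, the extreme value of 500 in `taxon_a` has no more influence than any other top-ranked observation.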

SparCC (Sparse Correlations for Compositional Data)

SparCC is specifically designed to estimate correlation networks from compositional data, which is characteristic of microbiome datasets where sequencing results represent relative abundances rather than absolute counts [29] [30]. The method uses a log-ratio transformation of the relative abundance data to overcome compositionality constraints [30]. SparCC is based on the concept that the variance of the log-ratio between two components in a composition can be expressed in terms of the variances of the log-transformed original components [30].

The key innovation of SparCC is that it leverages the sparsity typical of microbial ecosystems, where most species do not interact with one another [30]. The algorithm iteratively approximates the correlation network using the relationship:

Var(log(Xᵢ/Xⱼ)) = Var(log Xᵢ) + Var(log Xⱼ) - 2Cov(log Xᵢ, log Xⱼ)